Personal Data Detection - KG Competitors

INFO 523 - Spring 2024 - Project Final

Shashank Yadav

Gorantla Sai Laasya

Maksim Kulik

Remi Hendershott

Surya Vardhan Dama

Kommareddy Monica Tejaswi

Priom Mahmud

Introduction

- Our team is participating in a Kaggle competition in which the primary goal is to develop a model capable of detecting personally identifiable information (PII) in student writing.

- Our task focuses on word-level classification, where each word in a text belongs to one of several predefined categories, akin to a multiclass classification problem. This approach is commonly known as ‘Named Entity Recognition’.

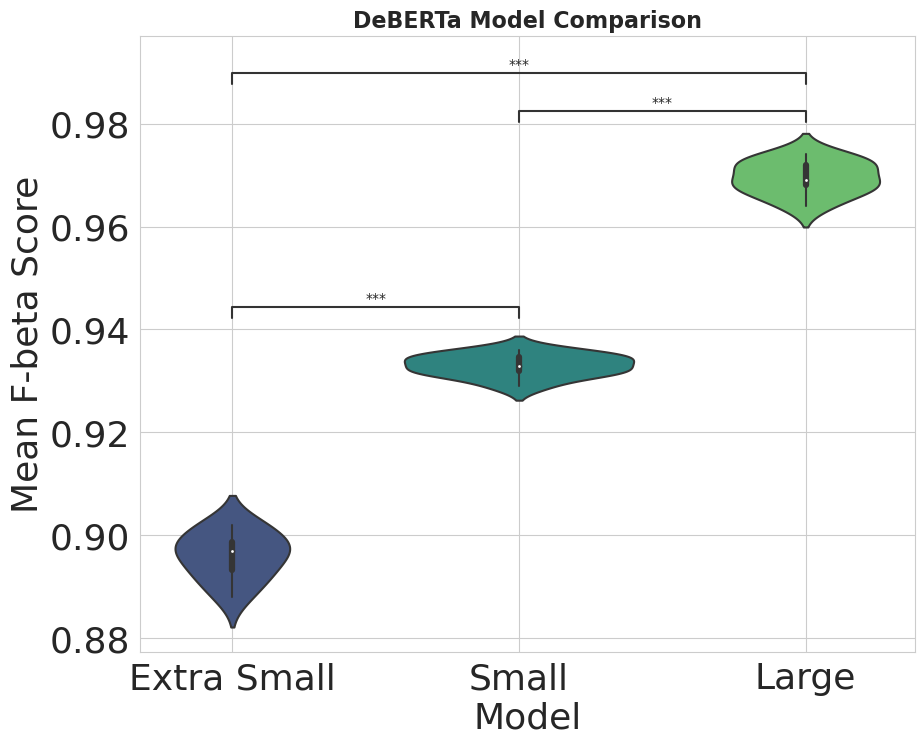

- We fine-tuned three DeBERTa models of different sizes (extra small, small, and large) on the Kaggle task and compared them to determine which one works best.

Our Questions to Solve

- Can we develop a model that successfully detects personally identifiable information (PII) in student writing?

- How can we evaluate the model’s performance effectively? Which metrics are most appropriate for PII detection tasks?

Dataset

- Approximately 22,000 student essays, all written in response to a single assignment prompt. Each word in a text is assigned one of 13 predefined labels.

- The key variables in the dataset include tokens representing simplified word forms, each associated with these labels.

- Each label is prefixed with either B (beginning) or I (inside), which indicates whether a token is the first word of an entity or a continuation of one. The majority of tokens are labelled “O” (outside), meaning they do not constitute PII.

- Preprocessing: The text is tokenized using DeBERTa’s tokenizer to ensure that named entities are correctly identified and segmented.
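
As a minimal sketch of the B/I/O scheme described above, the snippet below groups consecutive word-level labels back into entity spans. The sample sentence, the label name `NAME_STUDENT`, and the helper `bio_to_spans` are illustrative assumptions and are not part of the project code.

```python
from typing import List, Optional, Tuple


def bio_to_spans(labels: List[str]) -> List[Tuple[str, int, int]]:
    """Group BIO labels into (entity_type, start, end) spans; end is exclusive."""
    spans: List[Tuple[str, int, int]] = []
    current_type: Optional[str] = None
    start = 0
    for i, label in enumerate(labels):
        is_begin = label.startswith("B-")
        # An I- tag that does not continue the open entity is treated as a new entity.
        is_stray_inside = label.startswith("I-") and label[2:] != current_type
        if is_begin or is_stray_inside:
            if current_type is not None:
                spans.append((current_type, start, i))
            current_type = label[2:]
            start = i
        elif label == "O":
            if current_type is not None:
                spans.append((current_type, start, i))
            current_type = None
        # Otherwise the label is an I- tag continuing the open entity.
    if current_type is not None:
        spans.append((current_type, start, len(labels)))
    return spans


# Hypothetical example sentence with word-level labels.
tokens = ["My", "name", "is", "Jane", "Doe", "and", "I", "study", "design", "."]
labels = ["O", "O", "O", "B-NAME_STUDENT", "I-NAME_STUDENT", "O", "O", "O", "O", "O"]

spans = bio_to_spans(labels)
entities = [(etype, " ".join(tokens[s:e])) for etype, s, e in spans]
print(entities)  # [('NAME_STUDENT', 'Jane Doe')]
```

The B prefix lets two adjacent entities of the same type stay separate, which a plain per-word class label could not express.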

Variables of the Dataset

Methodology

- Tokenization: Tokenize the raw text using the appropriate DeBERTa tokenizer.

- Fine-Tuning: All models were fine-tuned on the same training set with a very small learning rate (6e-6). We used the KerasNLP Python package with the JAX backend, and fine-tuned the models on an NVIDIA 4070 Ti GPU.

- Evaluation: We assessed each model’s performance on the validation set using the F5 score. In the F5 score, recall is considered five times as important as precision. This makes it well suited to our case: missing a positive case (a false negative) is much more problematic than incorrectly labeling a negative case as positive (a false positive), and the classes are heavily imbalanced.

- Comparison: Compared the performance and resource utilization of each model.
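
To make the F5 metric concrete, here is a small sketch that computes the general F-beta score from true-positive, false-positive, and false-negative counts, using the standard formula F_β = (1 + β²)·TP / ((1 + β²)·TP + β²·FN + FP). The counts are made up for illustration and the function name `fbeta_score` is an assumption; the competition's own scorer may aggregate over labels differently.

```python
def fbeta_score(tp: int, fp: int, fn: int, beta: float = 5.0) -> float:
    """F-beta from raw counts; beta > 1 weights recall more heavily than precision."""
    b2 = beta ** 2
    denom = (1 + b2) * tp + b2 * fn + fp
    # With no positives predicted or present, define the score as 0.
    return (1 + b2) * tp / denom if denom else 0.0


# Two hypothetical models with mirrored error profiles (not our actual results).
high_recall = dict(tp=80, fp=40, fn=20)     # precision ~0.667, recall 0.8
high_precision = dict(tp=80, fp=20, fn=40)  # precision 0.8, recall ~0.667

for name, counts in [("high recall", high_recall), ("high precision", high_precision)]:
    f1 = fbeta_score(**counts, beta=1.0)
    f5 = fbeta_score(**counts, beta=5.0)
    print(f"{name}: F1={f1:.3f}  F5={f5:.3f}")
```

Both hypothetical models have the same F1 (about 0.727), but the high-recall one scores about 0.794 on F5 versus about 0.671 for the high-precision one. This is why a recall-heavy metric favors models that miss less PII.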

Model Summary

| Model Configuration | Parameters (Millions) | Training Details | Expected Advantage |
|---|---|---|---|
| DeBERTa-v3 Extra Small | 70.68 | Smaller batch size; maximize model efficiency | Lower computational requirements; suitable for limited resources |
| DeBERTa-v3 Small | 141.30 | Moderate batch size; balanced computational load and performance | Better than extra small model with manageable resource use |
| DeBERTa-v3 Large | 434.01 | Larger batch size; extended training periods | Highest accuracy and performance; suitable for resource-abundant scenarios |

Results

- The Extra Small DeBERTa model offers the fastest inference time, but at the cost of a lower F5 score.

- The Small DeBERTa model provides balanced performance, with reasonable inference times and an improved F5 score over the Extra Small variant.

- The Large DeBERTa model achieves the highest F5 score, reflecting its larger model capacity.

Conclusions

- Regarding our research questions: we can indeed build a model capable of accurately detecting personally identifiable information (PII) in student writing. To find the most effective approach, we built three DeBERTa models of different sizes and evaluated their performance.

- We compared their F5 scores and took their inference times into consideration. The Large DeBERTa model achieved the highest F5 score, making it the most effective model overall.

- However, the choice of model depends on the specific needs for efficiency and performance. For limited-resource environments, the Extra Small or Small models are recommended. For maximum accuracy, where resources are plentiful, the Large model is the best choice.

References

- Kaggle competition information: https://www.kaggle.com/competitions/pii-detection-removal-from-educational-data/overview

- For title page image: https://www.freepik.com/free-vector/fingerprint-concept-illustration_10258681.htm#query=personal%20data&position=49&from_view=keyword&track=ais&uuid=a14f34fb-adf2-45bc-a8f5-ae2b66197593

- Our logo: https://icons8.com/icons/set/competition

- Detect Fake Text: KerasNLP [TF/Torch/JAX][Train]

- Token classification

- Transformer ner baseline [lb 0.854]

- Thank You image: https://www.freepik.com/free-vector/thank-you-concept-illustration_34680609.htm#page=2&query=thank%20you%20gif&position=29&from_view=keyword&track=ais&uuid=e51f94e5-1758-47eb-8cb9-8d69209b8ffd
